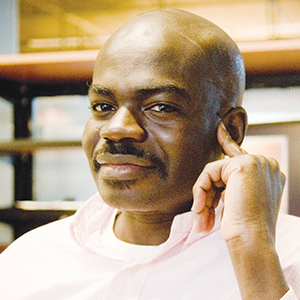

“The problem with computers is that there is not enough Africa in them.” Brian Eno, an experimental electronic musician, first uttered these words in 1995. Twenty years later, computer scientist Dr. Kwabena Boahen, a native of Ghana, repeated the mantra during a TED talk to help explain how his research seeks to make computers work more like our brains. After reading the quote, Boahen laughed, gathered himself and said, “Nobody was listening then, but now people are beginning to listen because there’s a pressing technological problem that we face.”

Boahen spoke of the dwindling applicability of Moore’s law, the now-vindicated prophecy first made in 1965 by Intel co-founder Gordon Moore, who predicted that the number of transistors that could fit on a computer chip would keep doubling (a pace he later put at roughly every two years), allowing for smaller and smaller chips that compute faster and cost less.

But at a certain point, only so many transistors can fit on a chip. Now that wafer-thin devices can contain more computing power than the entire Apollo 11 mission, Stanford researchers like Boahen and Dr. Krishna Shenoy, a professor of electrical engineering and neurobiology, have begun to meld neurology and electrical engineering to tackle new problems.

The goals are altruistic: curing diseases such as Parkinson’s and Alzheimer’s, restoring sight to the blind and granting the paralyzed the ability to walk again. And then there’s another goal on the horizon: linking our brains to computers so we can better understand and harness the power of our minds.

Brain Power

Boahen used his first computer as a teenager in Accra, the capital of Ghana. As he tinkered with the machine, he found that its linear operation, from the input and central processing unit to the memory storage, seemed overly cumbersome. Decades later, Boahen, now a professor of bioengineering and electrical engineering at Stanford, wants to create a computer that works more like the extremely efficient yet mysterious network housed in the brain.

Throughout his academic career, Boahen has attempted to infuse his native continent’s more naturalistic worldview into his research by drawing from biology to create so-called “neuromorphic” computer systems.

Harnessing the potential of such systems has only recently been recognized as critical. From the technology’s inception until very recently, the emphasis has been on making computers smaller and faster. But now, progress has butted up against physical limitations, as the available space through which electrons can pass in today’s chips has constricted while the energy demands have become exorbitant. The end of the Moore’s law era draws nigh.

As a result, computer scientists are gradually shifting their emphasis toward efficiency rather than miniaturization, according to Eric Kauderer-Abrams, a graduate student in Boahen’s lab. Brains use far less energy to carry out their operations than computers do, which makes evolutionary sense. Unlike computers, brains have to be fed. And the more energy they require, the less helpful they are to whichever creature is carrying them around.

When making calculations, neurons in our brains fire about once every millisecond. Computer transistors, the digital equivalent of neurons, switch on the order of nanoseconds, about one million times faster. But getting to that level of speed requires about 100,000 times more energy.

Part of the reason: computers complete their calculations to a far higher level of accuracy than the human brain. They can describe the exact pigment of a pixel on a screen, whereas the human eye determines something is kinda-sorta brown and calls it a day.

Additionally, computers require more energy because of their basic way of operating. In computers, transistors switch on and off to generate binary information for processing. When a transistor lets current flow through a circuit, that registers as a 1. When it doesn’t, that registers as a 0.
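
As a rough illustration of the on/off idea (a simplified sketch, not how any particular chip is wired), the Python below shows how a row of eight switches can spell out the red value of a single brownish pixel. The value 150 and the helper names `to_switch_states` and `from_switch_states` are hypothetical, chosen only for the example.

```python
def to_switch_states(value: int, width: int = 8) -> list[int]:
    """Return the on (1) / off (0) pattern that encodes `value` in `width` bits,
    most significant bit first."""
    if not 0 <= value < 2 ** width:
        raise ValueError("value does not fit in the given number of bits")
    return [(value >> bit) & 1 for bit in reversed(range(width))]


def from_switch_states(states: list[int]) -> int:
    """Rebuild the number from its on/off pattern."""
    value = 0
    for state in states:
        value = (value << 1) | state
    return value


# Hypothetical example: the red channel of one brownish pixel.
red = 150
switches = to_switch_states(red)
print(switches)                      # [1, 0, 0, 1, 0, 1, 1, 0]
print(from_switch_states(switches))  # 150
```

A full-color pixel commonly takes three such 8-bit patterns (red, green and blue), which is one way to see how exactly a computer pins down a shade the eye would simply call brown.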

More or less, these operations happen one at a time, one after the other. This long queue forces the computer to work extra hard to get all its calculations done perfectly and quickly. And occasionally, all that traffic through a narrow space causes the computer to make an incorrect reading, which can eventually crash its operating system.

The human brain offers an appealing alternative precisely because it operates so differently. It appears to compute using networks of neurons that are redundantly linked together. Not only are these neurons working on the same problem at once, they’re also storing memories in multiple places. If one part of the brain loses a piece of information, another can provide identical information in its place, which, fortunately, keeps us from crashing.
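
The brain’s redundancy has a loose parallel in engineering, where critical data is often copied to several places and read back by majority vote. The toy Python sketch below illustrates only that engineering idea; it is an analogy, not a model of how neurons actually store memories, and the class name `RedundantStore` is hypothetical.

```python
from collections import Counter


class RedundantStore:
    """Toy store that writes every value to several replicas and reads back
    the value most replicas agree on, so one lost or corrupted copy is tolerated.
    (An engineering analogy only, not a model of the brain.)"""

    def __init__(self, replicas: int = 3) -> None:
        self.copies: list[dict[str, str]] = [{} for _ in range(replicas)]

    def write(self, key: str, value: str) -> None:
        for copy in self.copies:
            copy[key] = value

    def read(self, key: str) -> str:
        surviving = [copy[key] for copy in self.copies if key in copy]
        if not surviving:
            raise KeyError(key)
        # Majority vote among the copies that still hold this key.
        value, _count = Counter(surviving).most_common(1)[0]
        return value


store = RedundantStore()
store.write("route home", "left at the oak tree")
store.copies[1]["route home"] = "garbled"  # one copy is corrupted
print(store.read("route home"))  # left at the oak tree
```

Three copies tolerate any single lost or corrupted copy; tolerating more simultaneous failures takes more copies.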

Now seems like a good time to insert Kauderer-Abrams’ important disclaimer regarding the advance of neuromorphic computing: We have a long way to go because we do not fully understand how the brain goes about its business. We can approximate aspects of it and observe that it follows certain patterns found elsewhere in nature, but for the most part, Boahen’s lab is tugging on a string from a very large and tangled ball of yarn.

“It’s extremely humbling,” Kauderer-Abrams says. “It’s difficult to come to terms with the fact that we know so little. On the other hand, it’s incredibly inspiring. Even if I learn one tiny little fact, it never ceases to blow my mind.”

Mind over Matter

Although Boahen’s neuromorphic computers hold exciting possibilities, Shenoy’s work builds on the mind-boggling precision and power of the traditional method of computation. For 15 years, he has sought the secret to telekinesis, moving objects merely by thinking about moving them, by studying the electrical activity of neurons in the motor cortex. (Shenoy calls it “brain-controlled prosthesis,” which is more precise, but, like, super boring.)

Already, Shenoy’s research has allowed three people with movement impairments to send a text message simply by thinking about where they’d like to move a cursor on an on-screen keyboard, an ability his grandfather never had.

“[He] suffered from multiple sclerosis for around 40 years,” Shenoy says. “He was wheelchair-bound. It was not like I ever had a conscious epiphany, ‘I want to help him,’ but I think it subconsciously influenced me greatly.”

Translating readings from the brain into an input for a computer that spits out corresponding motions isn’t an easy task, nor does it fit into any one field of study. So, Shenoy founded the Neural Prosthetic Systems Laboratory at Stanford and oversees a multidisciplinary team of researchers specializing in bioengineering, electrical engineering, neuroscience and more.

UCLA assistant professor Jonathan Kao worked in Shenoy’s lab from September 2010 until last March. An electrical engineer, he took one of Shenoy’s classes and was fascinated by the idea of studying the brain as a system similar to a computer. He reached out to Shenoy, who accepted him into the lab and had him help translate jumbled readings of brain activity into something useful.

To do this, Stanford neurosurgeons overseen by Dr. Jaimie Henderson, a longtime collaborator of Shenoy’s, implanted a tiny device called the Utah electrode array into the brains of monkeys. Containing 100 electrodes, the array captured signals from the monkeys’ motor cortex, which is astonishingly similar to that of human beings. In a simulated 3-D environment, the monkeys reached for dots in all directions and were rewarded with juice.

Shenoy, Kao and others, including Paul Nuyujukian, matched these signals with the resulting movements. From the data, they developed algorithms to read these intentions and translate them into the motion of a cursor on a computer screen, much as an autonomous car reads its environment to decide when to steer, brake or accelerate.
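
As a rough illustration of turning matched signals and movements into a decoder, the Python below uses a textbook approach from motor-cortex research: fit each neuron’s “cosine tuning” to reach direction, then decode with a population vector. It is a stand-in, not the algorithm Shenoy’s team developed. It assumes each neuron fires most for one preferred direction, that training reaches are evenly spaced around a circle, and it uses made-up firing rates; the function names are hypothetical.

```python
import math


def fit_cosine_tuning(reach_angles, rates):
    """Fit rate = b0 + bx*cos(angle) + by*sin(angle) for one neuron.

    Assumes at least three reach angles evenly spaced around the circle; in that
    case the least-squares fit reduces to the simple averages below.
    Returns (baseline_rate, preferred_direction_in_radians).
    """
    n = len(reach_angles)
    b0 = sum(rates) / n
    bx = 2 / n * sum(r * math.cos(a) for a, r in zip(reach_angles, rates))
    by = 2 / n * sum(r * math.sin(a) for a, r in zip(reach_angles, rates))
    return b0, math.atan2(by, bx)


def population_vector(rates, baselines, preferred_dirs):
    """Each neuron 'votes' for its preferred direction, weighted by how far its
    current firing rate is above (or below) its baseline."""
    dx = sum((r - b) * math.cos(p) for r, b, p in zip(rates, baselines, preferred_dirs))
    dy = sum((r - b) * math.sin(p) for r, b, p in zip(rates, baselines, preferred_dirs))
    return dx, dy


# Calibration: eight evenly spaced reaches, four simulated neurons whose
# (made-up) rates follow 10 + 8*cos(angle - preferred) spikes per second.
angles = [2 * math.pi * k / 8 for k in range(8)]
true_prefs = [0.0, math.pi / 2, math.pi, 3 * math.pi / 2]
tuning = [
    fit_cosine_tuning(angles, [10 + 8 * math.cos(a - p) for a in angles])
    for p in true_prefs
]
baselines = [b for b, _ in tuning]
prefs = [p for _, p in tuning]

# Decoding a new moment of activity: neuron 0 fires above baseline, neuron 2 below.
dx, dy = population_vector([18, 10, 2, 10], baselines, prefs)
print(round(math.degrees(math.atan2(dy, dx))))  # 0, i.e. move the cursor right
```

The simulated rates here contain no noise; real recordings do, which is where the difficulty Kao describes comes in.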

It’s harder than it sounds. The biggest challenge researchers encountered was that the brain appears to issue commands through many neurons at once, some of which may be “noisier” than others, meaning their signals carry less useful information and can lead to inexact results.

“The cursor isn’t always going to go exactly where you want it because the algorithm isn’t perfect,” Kao says.

To improve it, the team observed neuron signals to determine which were the most reliable and least noisy. Then they filtered these results to favor the responses of the most reliable neurons while still incorporating readings from the messier, yet still helpful, ones.
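
One standard way to favor reliable signals without discarding noisy ones is inverse-variance weighting, sketched in Python below. It is a generic statistical technique offered as an illustration, not the lab’s published filter; the per-neuron readings, their units and their noise variances are invented numbers, and `combine_estimates` is a hypothetical name.

```python
def combine_estimates(estimates, noise_variances):
    """Inverse-variance weighted average of several noisy estimates.

    Each estimate counts in proportion to 1 / variance, so low-noise neurons
    dominate while noisier ones still contribute. Returns the combined estimate
    and its variance (never larger than the smallest input variance).
    """
    if not estimates or len(estimates) != len(noise_variances):
        raise ValueError("need one variance per estimate")
    if any(v <= 0 for v in noise_variances):
        raise ValueError("variances must be positive")
    weights = [1.0 / v for v in noise_variances]
    total = sum(weights)
    combined = sum(w * e for w, e in zip(weights, estimates)) / total
    return combined, 1.0 / total


# Hypothetical cursor-speed readings (cm/s) implied by three neurons:
# one reliable, one middling, one very noisy.
speed, variance = combine_estimates([10.0, 12.0, 20.0], [1.0, 4.0, 16.0])
print(round(speed, 2), round(variance, 2))  # 10.86 0.76
```

A plain average of the three readings would be 14.0 cm/s, dragged upward by the noisiest neuron; the weighted estimate stays close to the reliable one while still nudging toward the others.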

After several years of experiments, Nuyujukian and Kao had the monkeys hitting a target every second. Building upon Shenoy’s years of work, they passed on their research to the team in charge of human trials. Henderson implanted an electrode array into the brain of a 50-year-old man who had suffered for years from amyotrophic lateral sclerosis, better known as Lou Gehrig’s disease. The tense, bold surgery that linked the man’s brain to a computer lasted four hours and was a success.

A month later, the human trial team returned to see if they could replicate the proficiency achieved with the monkeys. After a few final tweaks, the program worked well enough for the participant, using only his mind, to send Henderson a text that read: “Let’s see your monkey do that!” The team popped a bottle of Dom Perignon to celebrate the milestone.

“It was definitely awe-inspiring,” Kao says. “To see thought provide someone with ALS with the ability to use a computer cursor at this level of control was really special.”

Dr. Robot

These advancements, while awe-inspiring, may seem limited: they’re used by only a select few and likely won’t be perfected until way out in the future. But Stanford Medicine also hosts the Neurosurgical Simulation Lab, where surgeons currently navigate exact replicas of their patients’ brains using virtual reality.

It’s a far cry from the 1980s, when Dr. Gary Steinberg started as a neurosurgeon. Back then, he practiced on stone-cold cadavers and, during live surgery, opened huge sections of patients’ skulls to give himself room to operate.

“The idea was to expose as much as you can just in case something happens, but we’ve gotten much more sophisticated,” Steinberg says. “What we used to do when I trained was primitive, medieval compared to what we do now.”

Today, he plans surgeries, teaches students and reassures patients using exact simulations of each patient’s brain, built by combining images from MRIs, CT scans and angiograms. The technology has already begun changing neurosurgery.

Thanks to this lab, Steinberg no longer has to do the cumbersome mental gymnastics of imagining 2-D scans in three dimensions or plan surgeries with generic models. Now, he can digitally strip bone away from the images, view the brain from different angles and appreciate minute details on critical arteries, tissues and veins, as well as fiber tracts, the bundles of nerve fibers that carry signals within the brain and between the brain and the rest of the body.

With this information, Steinberg can walk a patient through a simulation of the surgery ahead of time to reassure them. He and fellow surgeons are plotting pathways to deeper parts of the brain and operating in areas previously thought unreachable. And during a procedure, Steinberg can lay the 3-D model over the live feed to add an even deeper layer of detail, which, in an area as sensitive as the brain, is crucial.

“It can make the difference between a patient that comes out normal and one who comes out with a paralysis or an inability to speak,” Steinberg says. “And it really draws [patients] in. In fact, we have a number of them who have come to Stanford as opposed to other institutions since we have this technology.”

With the days of wide-open craniotomies over, Steinberg says, he often makes openings of just four millimeters before operating with delicate instruments, all while watching live footage fed to him from microscopically detailed endoscopes. In the future, Steinberg expects surgeons to operate while wearing 3-D virtual reality goggles.

Already, the University of Calgary’s Dr. Garnette Sutherland has performed several successful neurosurgeries by manually controlling robotic instruments. In Silicon Valley, El Camino Hospital and Good Samaritan Hospital both offer robot-assisted surgeries, which carry the potential for less invasiveness and quicker recovery. But Sunnyvale-based Intuitive Surgical has also faced lawsuits because procedures using its da Vinci surgical robots haven’t been accident-free, although, of course, neither are surgeries done by hand.

Still, if the instruments can be controlled remotely, Steinberg says, it’s easy to speculate about surgeries performed by doctors who are miles and miles away.

“Thirty years from now, it’ll be hard to imagine what we’ll be doing,” he says.

Eric Kauderer-Abrams has a few educated guesses.

First off, there’s already a demand for Boahen’s computers because their energy-efficient, precise-enough results suit remote vehicles such as drones. Not only is it advantageous for drones to go as long as possible without recharging, but that level of precision is fine for a flying machine that only needs to avoid obstacles, not fly exactly in between them.

Beyond that, the possibilities start getting pretty nuts.

“Ultimately, if you believe the brain is a physical device, which is a tenet of our scientific outlook on the world, then we should be able to communicate with it,” Kauderer-Abrams says. “Then it’s just a matter of how long it will take us to learn enough about how the brain represents information.”

In other words, if we can make computers like our brains, then we should be able to connect those computers to our brains. Already, Boahen’s lab has done some promising experiments with computers that mimic retinas. And since this style of computer has low power requirements, it’s possible that such computers could one day stimulate cells that send signals to the brain, giving sight to the blind.

And if it’s possible to send computer signals to the brain, it should theoretically also be possible to receive signals from the brain in an intelligible form. Researchers could then study how the brains of people with neurodegenerative diseases like Parkinson’s or Alzheimer’s process information, gaining a better understanding of these diseases and increasing the likelihood of a cure.

Of course, as we advance toward computers mimicking our brains, we could also edge closer to the mythical singularity, in which artificial intelligence surpasses our own, recognizes humanity’s obvious shortcomings and wages a swift, brutal and successful war against us.

Kauderer-Abrams cautions against apocalyptic thinking, noting that computers won’t surpass us any time soon. But he believes computer-to-brain interfacing may be closer than we imagine.

“If we can really start to understand the brain in various ways,” Kauderer-Abrams says, “the benefit to human health will be massive.”